Etmann et al. Connection between robustness and interpretability

On the Connection Between Adversarial Robustness and Saliency Map Interpretability

Advantages and disadvantages of adversarial training?

While this method – like all known approaches of defense – decreases the accuracy of the classifier, it is also successful in increasing the robustness to adversarial attacks.

Connections between the interpretability of saliency maps and robustness?

Saliency maps of robustified classifiers tend to be far more interpretable, in that structures in the input image also emerge in the corresponding saliency map.

How to obtain saliency maps for non-robustified networks?

In order to obtain a semantically meaningful visualization of the network’s classification decision in non-robustified networks, the saliency map has to be aggregated over many different points in the vicinity of the input image. This can be achieved either via averaging saliency maps of noisy versions of the image (Smilkov et al., 2017) or by integrating along a path (Sundararajan et al., 2017).
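The two aggregation schemes can be illustrated on a toy model. The sketch below is not the setup of either cited paper: `score` is a hypothetical stand-in for a network logit (the tanh of a linear function), its gradient is written out by hand, and the noise level, sample counts, and zero baseline are arbitrary assumptions chosen for illustration.

```python
import math
import random

def score(x, w):
    """Toy class score: tanh of a linear function (hypothetical stand-in for a logit)."""
    return math.tanh(sum(wi * xi for wi, xi in zip(w, x)))

def saliency(x, w):
    """Plain gradient saliency: d score / d x, computed analytically."""
    s = score(x, w)
    return [(1.0 - s * s) * wi for wi in w]

def smoothgrad(x, w, sigma=0.1, n_samples=200, seed=0):
    """Average the saliency map over Gaussian-perturbed copies of x."""
    rng = random.Random(seed)
    total = [0.0] * len(x)
    for _ in range(n_samples):
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        total = [t + g for t, g in zip(total, saliency(noisy, w))]
    return [t / n_samples for t in total]

def integrated_gradients(x, w, baseline=None, n_steps=200):
    """Integrate the gradient along the straight path from baseline to x (midpoint rule)."""
    if baseline is None:
        baseline = [0.0] * len(x)  # assumed all-zero baseline
    total = [0.0] * len(x)
    for k in range(n_steps):
        t = (k + 0.5) / n_steps
        point = [b + t * (xi - b) for xi, b in zip(x, baseline)]
        total = [tot + g for tot, g in zip(total, saliency(point, w))]
    return [(xi - b) * tot / n_steps for xi, b, tot in zip(x, baseline, total)]

x = [0.5, -1.0, 2.0]
w = [1.0, 0.5, -0.25]
sg = smoothgrad(x, w)
ig = integrated_gradients(x, w)
print("SmoothGrad:", sg)
print("Integrated gradients:", ig)
```

A useful sanity check for the path-integral variant is that the attributions sum to the difference between the score at the input and the score at the baseline, up to the discretization error of the integral.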

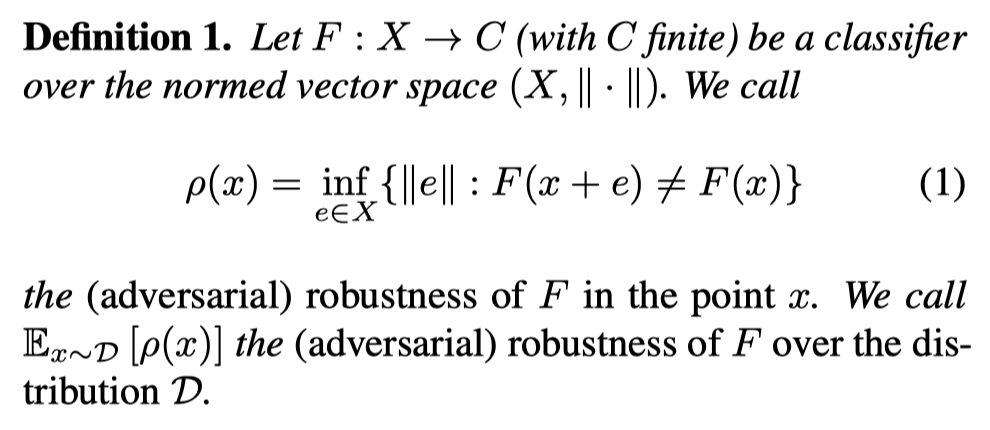

Definition of (adversarial) robustness

Put differently, the robustness of a classifier in a point is nothing but the distance to its closest decision boundary.

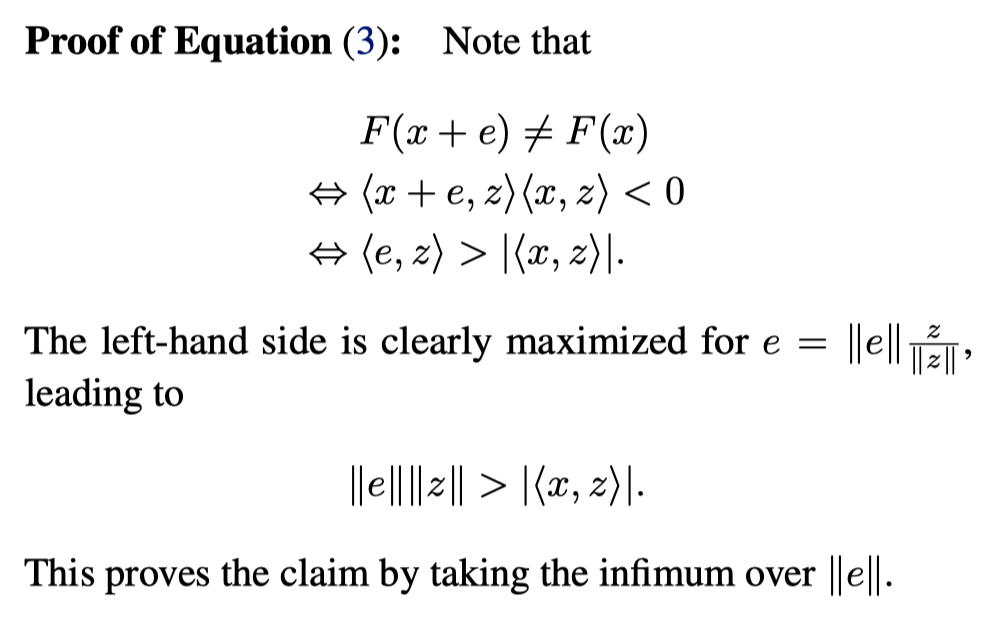

Prove: for linear binary cases

See OneNote page for details.
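For readers without access to that page, the linear binary case can be sketched as follows. The notation is an assumption, not necessarily the paper's: the classifier is $F(x) = \operatorname{sgn}(\Psi(x))$ with $\Psi(x) = \langle w, x \rangle + b$, and $\rho(x)$ denotes the Euclidean distance from $x$ to the decision boundary $\{\Psi = 0\}$.

```latex
\begin{align*}
\rho(x) &= \min_{e} \|e\|_2
  \quad \text{s.t.} \quad \langle w, x + e \rangle + b = 0 \\
% The constraint fixes the component of e along w:
\langle w, e \rangle &= -\Psi(x) \\
% Cauchy-Schwarz gives a lower bound on the perturbation norm:
|\Psi(x)| = |\langle w, e \rangle| &\le \|w\|_2 \, \|e\|_2
  \quad \Longrightarrow \quad \|e\|_2 \ge \frac{|\Psi(x)|}{\|w\|_2} \\
% The bound is attained by moving along w:
e^\ast &= -\frac{\Psi(x)}{\|w\|_2^2} \, w ,
  \qquad \|e^\ast\|_2 = \frac{|\Psi(x)|}{\|w\|_2} \\
\rho(x) &= \frac{|\langle w, x \rangle + b|}{\|w\|_2}
\end{align*}
```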

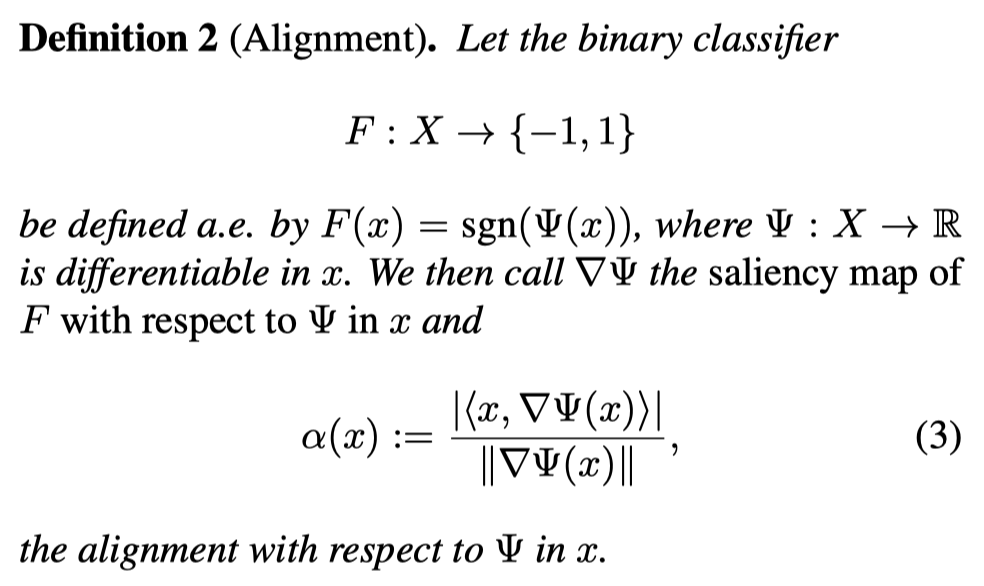

Definition of Alignment

- a.e. stands for almost everywhere

Relationship between alignment and linear robustness?

For a linear binary classifier, the alignment trivially increases with the robustness of the classifier.
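A small numerical check can make this concrete. The sketch below assumes the alignment is defined as $|\langle x, \nabla\Psi(x)\rangle| / \|\nabla\Psi(x)\|$ (the definition itself appears in these notes only as an image, so treat this form as an assumption) and uses a hypothetical linear score $\Psi(x) = \langle w, x\rangle + b$, for which the gradient is simply $w$.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    return math.sqrt(dot(u, u))

def robustness(x, w, b=0.0):
    """Distance from x to the hyperplane <w, x> + b = 0."""
    return abs(dot(w, x) + b) / norm(w)

def alignment(x, w):
    """Assumed definition: |<x, grad Psi>| / ||grad Psi||, with grad Psi = w for a linear model."""
    return abs(dot(x, w)) / norm(w)

w = [3.0, 4.0]  # hypothetical weight vector
points = [[0.1, 0.1], [0.5, 0.5], [1.0, 2.0]]
for x in points:
    # Without a bias term the two quantities coincide.
    print(x, robustness(x, w), alignment(x, w))
```

Under these assumptions the two quantities are identical when the bias is zero, and with a nonzero bias they differ by at most $|b| / \|w\|$, so a more robust point is also a more aligned one up to that constant.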

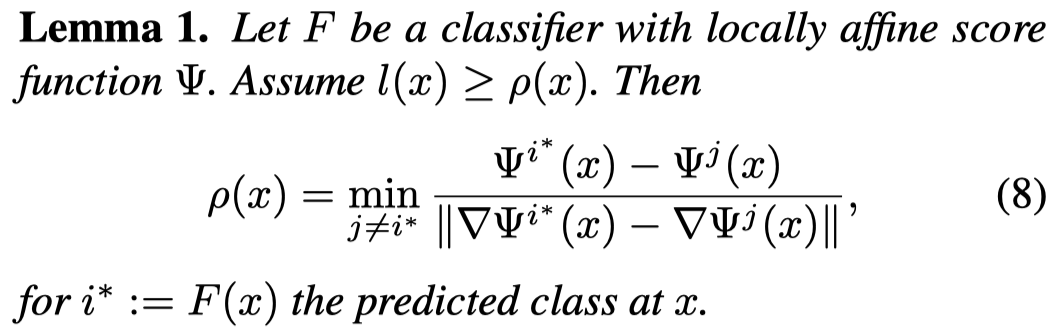

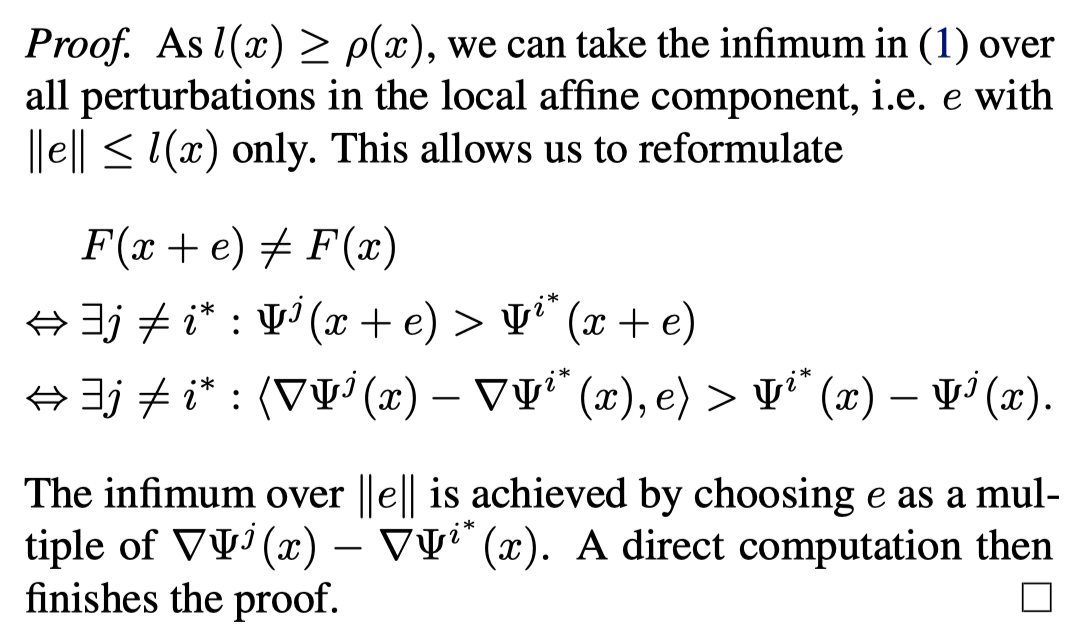

Prove Lemma 1

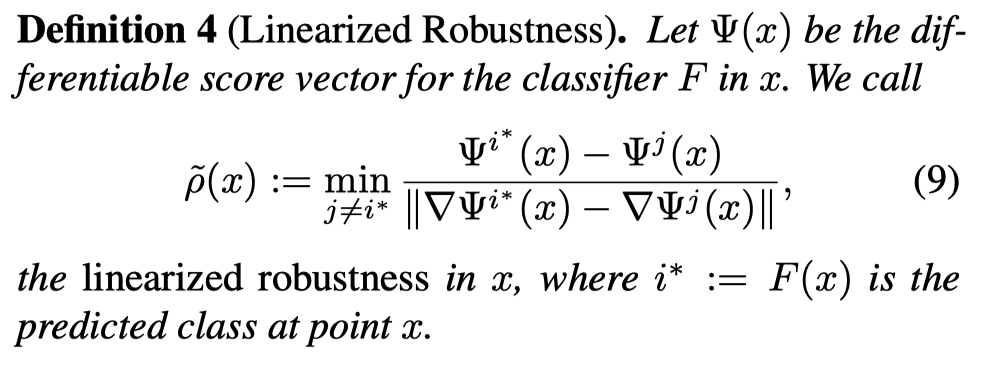

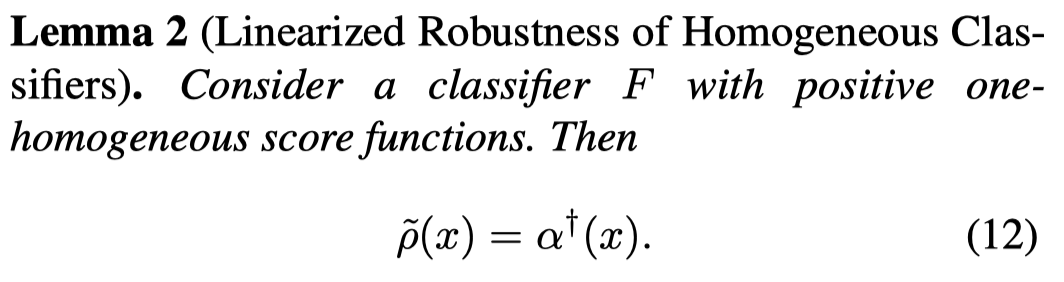

Definition of Linearized Robustness

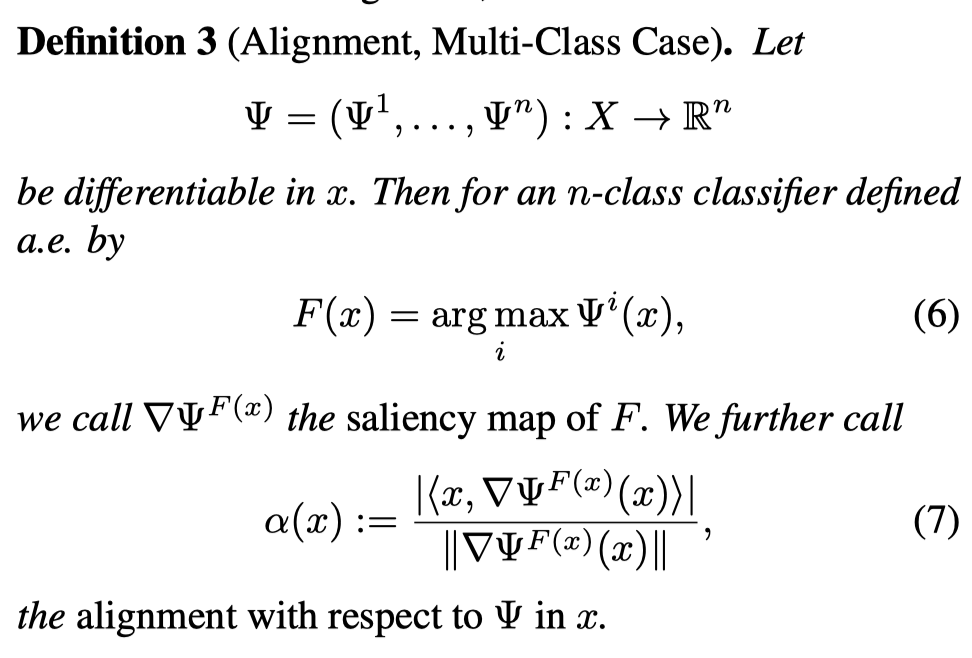

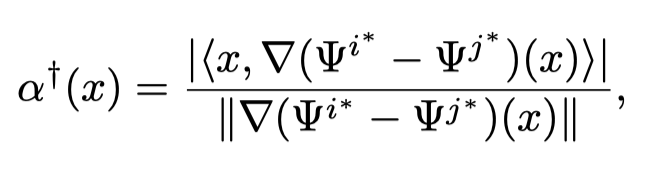

Binarized respective alignment

where is the minimizer of Eq (9),

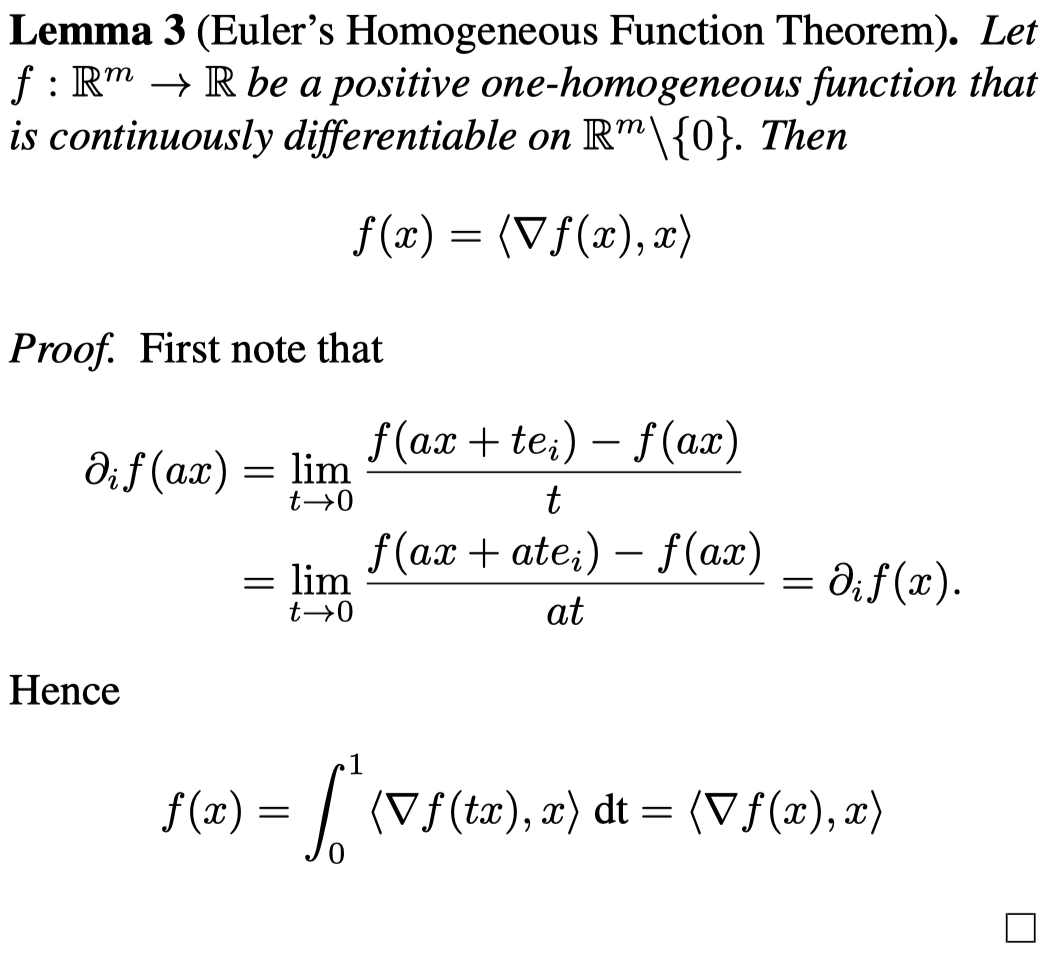

Positive one-homogeneous function

Any such function $f$ satisfies $f(\alpha x) = \alpha f(x)$ for all inputs $x$ and all scalars $\alpha > 0$.
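A standard example of a positive one-homogeneous function is a ReLU network without bias terms: scaling the input by a positive factor scales every logit by the same factor. The sketch below checks this numerically on a tiny two-layer network; the weight matrices `W1` and `W2` and the input are made-up values for illustration only.

```python
def relu(v):
    return [max(0.0, a) for a in v]

def matvec(M, v):
    return [sum(m * a for m, a in zip(row, v)) for row in M]

def logits(x, W1, W2):
    """Two-layer ReLU network without biases (hypothetical toy model)."""
    return matvec(W2, relu(matvec(W1, x)))

W1 = [[1.0, -2.0], [0.5, 1.5], [-1.0, 1.0]]
W2 = [[1.0, -1.0, 2.0], [0.0, 3.0, -1.0]]
x = [0.7, -0.3]
alpha = 2.5

scaled = logits([alpha * xi for xi in x], W1, W2)   # f(alpha * x)
expected = [alpha * v for v in logits(x, W1, W2)]   # alpha * f(x)
print(scaled, expected)

# The property only holds for positive factors: f(-x) is generally not -f(x).
flipped = logits([-xi for xi in x], W1, W2)
print(flipped, [-v for v in logits(x, W1, W2)])
```

Adding a bias to any layer breaks the property, which is why it is stated for bias-free networks here.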

Prove Lemma 2

Lemma 2 is a direct extension of 3.

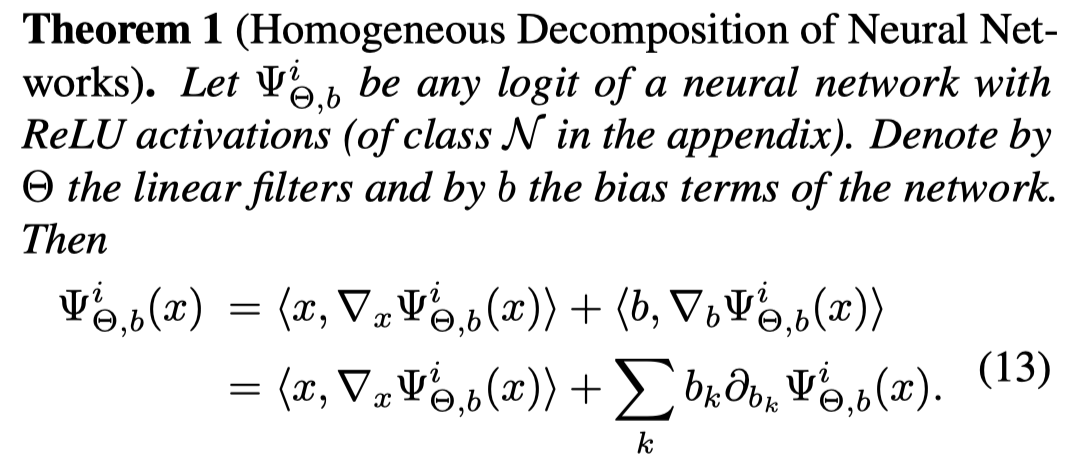

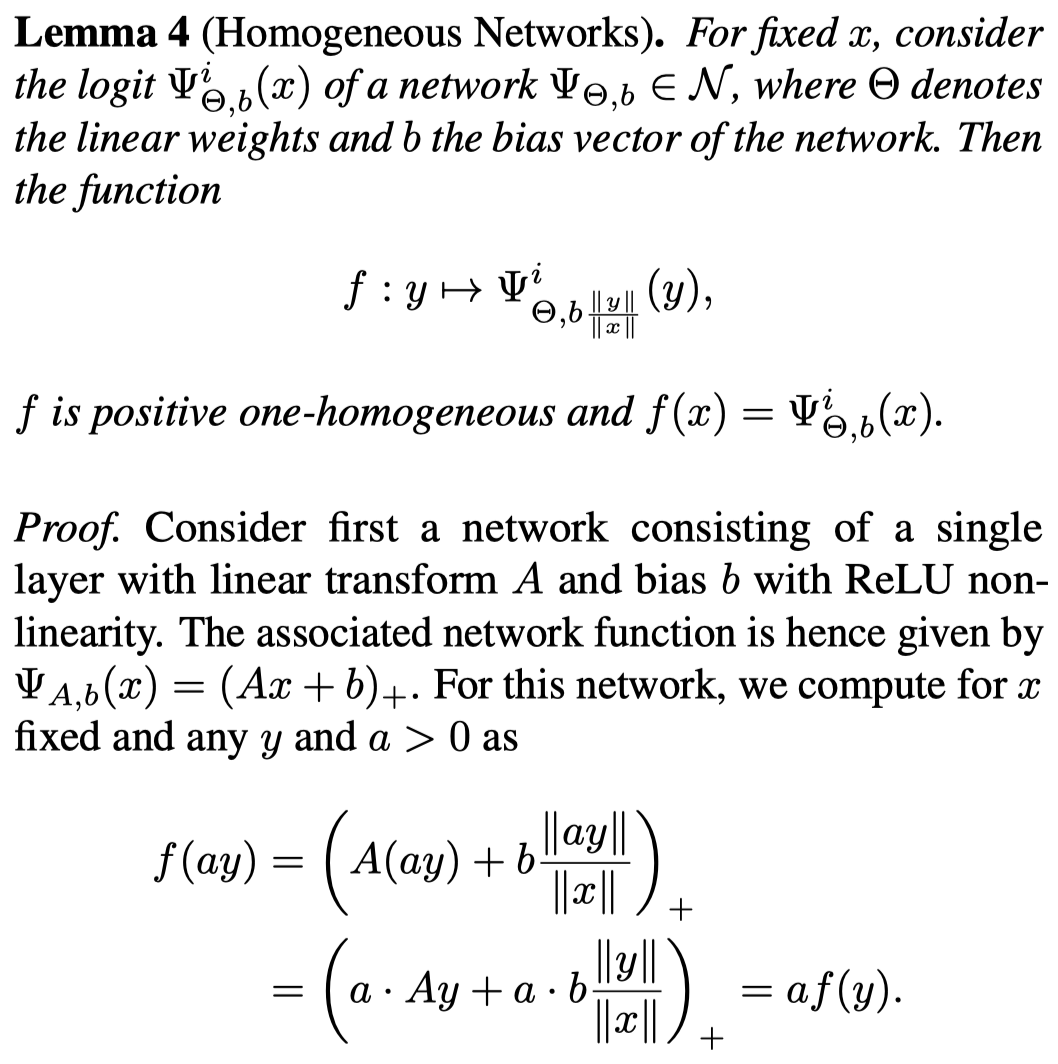

Prove Theorem 1

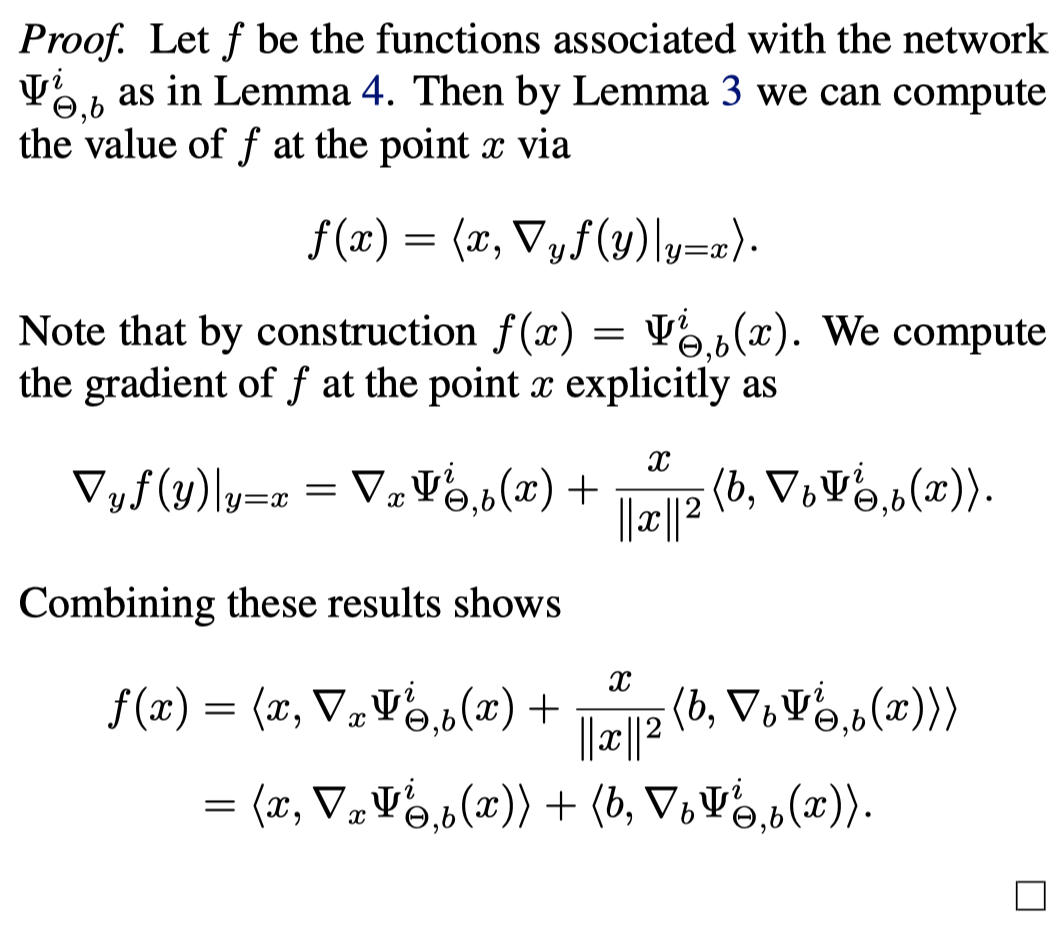

Proof of Theorem 1

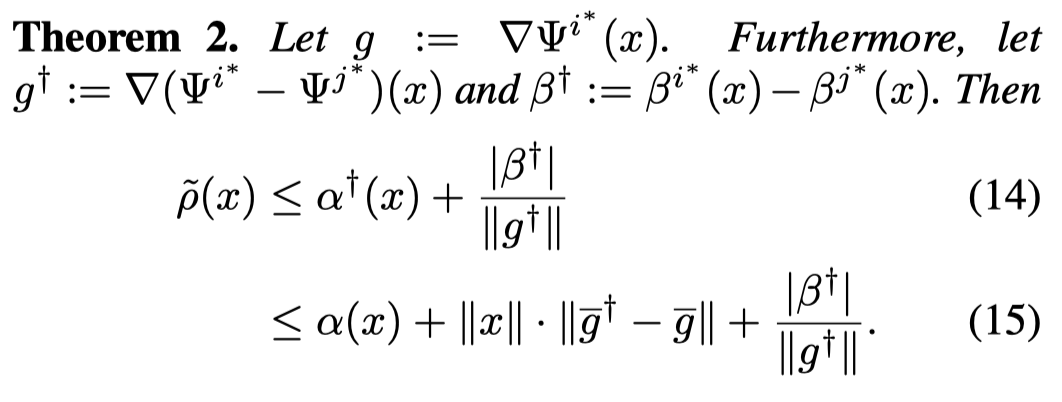

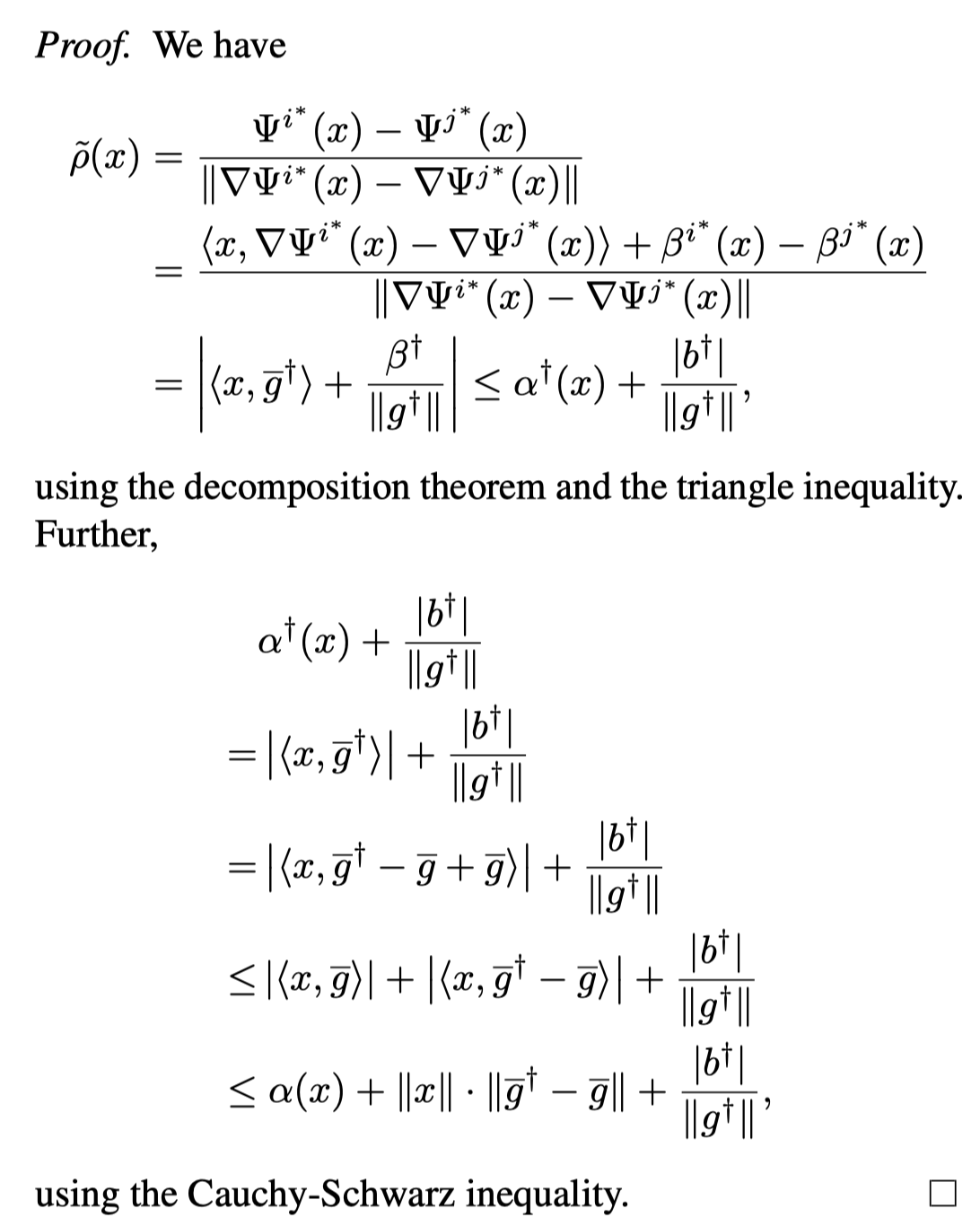

With and for , prove Theorem 2.

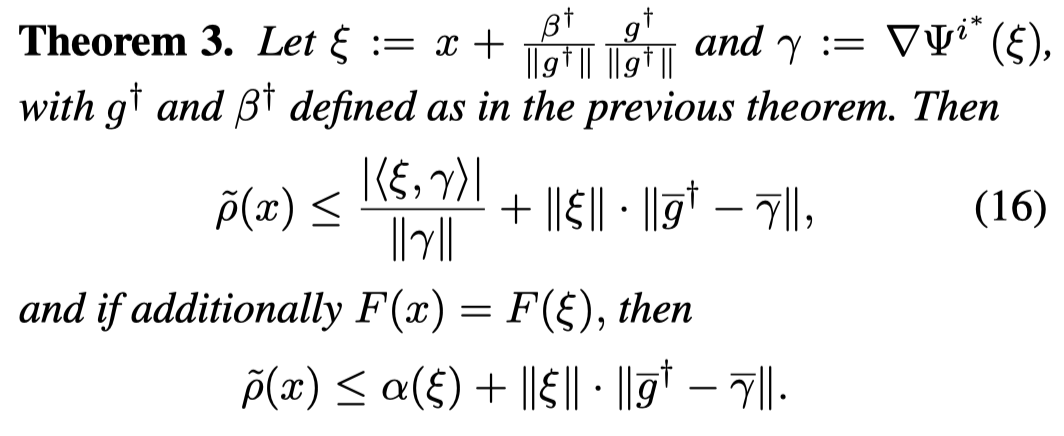

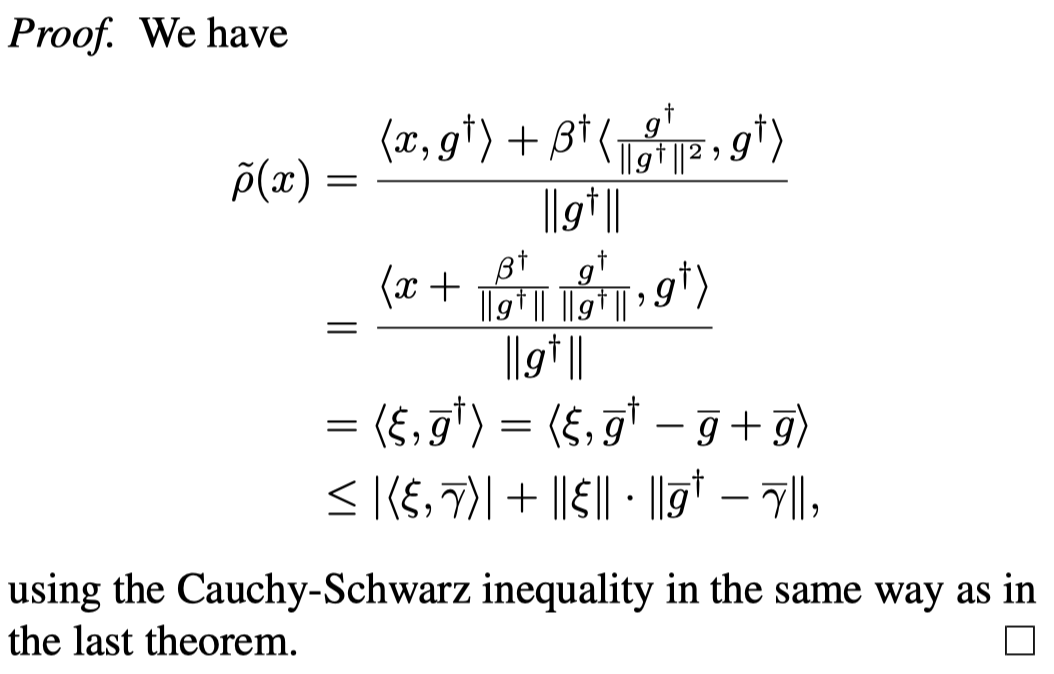

Prove Theorem 3

Note that the last equality is a typo by the authors, it is actually

Connection between Alignment and Interpretability

For most types of image data an increase in alignment in discriminative regions should coincide with an increase in interpretability.
